Biorobotics Laboratory BioRob

Aïsha Hitz - Master Project Winter 2007-2008

Synchronization of movements of a real humanoid robot with music

Assistants: Sarah Degallier, Ludovic Righetti

Abstract:

When listening to music, people intuitively detect a tempo and move their body to the rhythm of the song: they are influenced by an external signal, the music, and adapt their movements to its tempo. This behavior can be imitated with oscillators, dynamical systems that are able to synchronize to an external signal, here the music signal characterized by its frequency and its phase. The frequency represents the tempo, while the phase corresponds to the beats that people detect when tapping to the rhythm of a song. Like people, the oscillators should therefore adapt to the music by modifying their frequency and their phase. The aim of this project was thus to detect the tempo of any song with the adaptive frequency oscillators developed by L. Righetti and to transmit this tempo to the drumming controller written by S. Degallier.
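To make the link between frequency, tempo and beats concrete, here is a small sketch. It assumes, for illustration only, that a beat is emitted each time the oscillator phase completes a cycle (this page does not say which phase value marks a beat), and the helper names `bpm_to_omega`, `omega_to_bpm` and `beat_times` are hypothetical, not part of the project's code.

```python
import math


def bpm_to_omega(bpm):
    """Angular frequency (rad/s) of an oscillator completing one cycle per beat."""
    return 2.0 * math.pi * bpm / 60.0


def omega_to_bpm(omega):
    """Tempo in beats per minute for an angular frequency in rad/s."""
    return omega * 60.0 / (2.0 * math.pi)


def beat_times(phases, times):
    """Times (s) at which the wrapped phase goes from near 2*pi back to near 0.

    `phases` are unwrapped oscillator phases (rad) sampled at `times` (s).
    Assumes the phase increases by less than 2*pi between two samples.
    """
    two_pi = 2.0 * math.pi
    beats = []
    for k in range(1, len(phases)):
        prev = phases[k - 1] % two_pi
        cur = phases[k] % two_pi
        if cur < prev:  # the wrapped phase dropped: a new cycle (beat) started
            beats.append(times[k])
    return beats
```

For instance, a tempo of 120 BPM corresponds to an oscillator frequency of 4π rad/s, i.e. two beats per second.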

Adaptive frequency oscillators:

The first part of the project consisted of studying oscillators, and especially their synchronization to an external signal. Adaptation is added to the Hopf oscillator through a third equation describing the evolution of the oscillator frequency, so that the oscillator frequency evolves toward the frequency of the external input signal, here a piece of music.
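For reference, the adaptive Hopf oscillator is commonly written as follows in Righetti et al.'s work on adaptive frequency oscillators, where γ sets how fast the amplitude converges, √μ is the amplitude of the limit cycle, ε is the coupling strength and F(t) is the input signal. The third equation is the frequency adaptation rule; the exact variant and parameter values used in this project are not given on this page.

```latex
\begin{aligned}
\dot{x} &= \gamma\,(\mu - r^2)\,x - \omega\,y + \epsilon\,F(t) \\
\dot{y} &= \gamma\,(\mu - r^2)\,y + \omega\,x \\
\dot{\omega} &= -\epsilon\,F(t)\,\frac{y}{r}, \qquad r = \sqrt{x^2 + y^2}
\end{aligned}
```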

The advantage of these adaptive frequency oscillators is that they adapt continuously: as long as an external signal is applied, they keep adjusting their frequency. This is one reason for performing the detection online, since the tempo can change during a song.
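The continuous adaptation can be sketched by integrating the adaptive Hopf oscillator while the input tempo changes halfway through. This is a minimal sketch under stated assumptions: a pure sinusoid stands in for the music signal (how the actual audio was turned into an input is not described here), integration uses explicit Euler, and the parameters γ = 8, μ = 1, ε = 1 as well as the helper `track_tempo` are illustrative, not the project's code.

```python
import math


def bpm_to_omega(bpm):
    """Angular frequency (rad/s) of one oscillation per beat."""
    return 2.0 * math.pi * bpm / 60.0


def omega_to_bpm(omega):
    """Beats per minute corresponding to an angular frequency (rad/s)."""
    return omega * 60.0 / (2.0 * math.pi)


def track_tempo(tempi_bpm, segment_s=50.0, omega0=None,
                gamma=8.0, mu=1.0, eps=1.0, dt=1e-3):
    """Hypothetical helper: drive an adaptive Hopf oscillator with a sinusoid
    whose tempo switches to the next value of `tempi_bpm` every `segment_s`
    seconds. Returns the oscillator frequency omega (rad/s) at every step."""
    x, y = 1.0, 0.0
    # Start slightly detuned from the first tempo unless told otherwise.
    omega = bpm_to_omega(tempi_bpm[0]) - 0.5 if omega0 is None else omega0
    input_phase = 0.0
    steps = int(round(segment_s / dt))
    omegas = []
    for bpm in tempi_bpm:
        w_in = bpm_to_omega(bpm)
        for _ in range(steps):
            F = math.sin(input_phase)  # stand-in for the music signal
            r = math.hypot(x, y)
            dx = gamma * (mu - r * r) * x - omega * y + eps * F
            dy = gamma * (mu - r * r) * y + omega * x
            domega = -eps * F * y / r  # frequency adaptation rule
            # Explicit Euler step
            x += dt * dx
            y += dt * dy
            omega += dt * domega
            # Advance the input phase continuously so that a tempo change
            # does not introduce a phase jump in the input.
            input_phase += dt * w_in
            omegas.append(omega)
    return omegas


if __name__ == "__main__":
    seg = 50000  # samples per 50 s segment at dt = 1e-3
    tempi = [120.0, 126.0]
    omegas = track_tempo(tempi)
    for i, bpm in enumerate(tempi):
        tail = omegas[(i + 1) * seg - 10000:(i + 1) * seg]  # last 10 s
        est = omega_to_bpm(sum(tail) / len(tail))
        print(f"input {bpm:.0f} BPM -> estimated {est:.1f} BPM")
```

Averaging ω over the end of each segment smooths out the small ripple that the periodic input leaves on the frequency once the oscillator is locked; the estimate follows the tempo change without restarting the oscillator.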

Simulations:

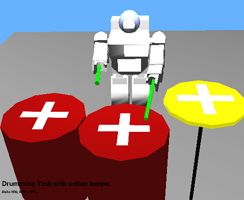

After testing the feasibility of using adaptive frequency oscillators for tempo detection, an implementation was considered first on the humanoid robot simulated in Webots and then on the real humanoid robot Hoap2. The results can be seen in these simulations.

Report and presentation

Report:

Related works

Papers:

Simulations with music

Implementation on the real robot, with different songs:
